Sqoop импортирует все таблицы MySQL в HDFS.

1. sqoop-import-all-tables импортирует несколько таблиц

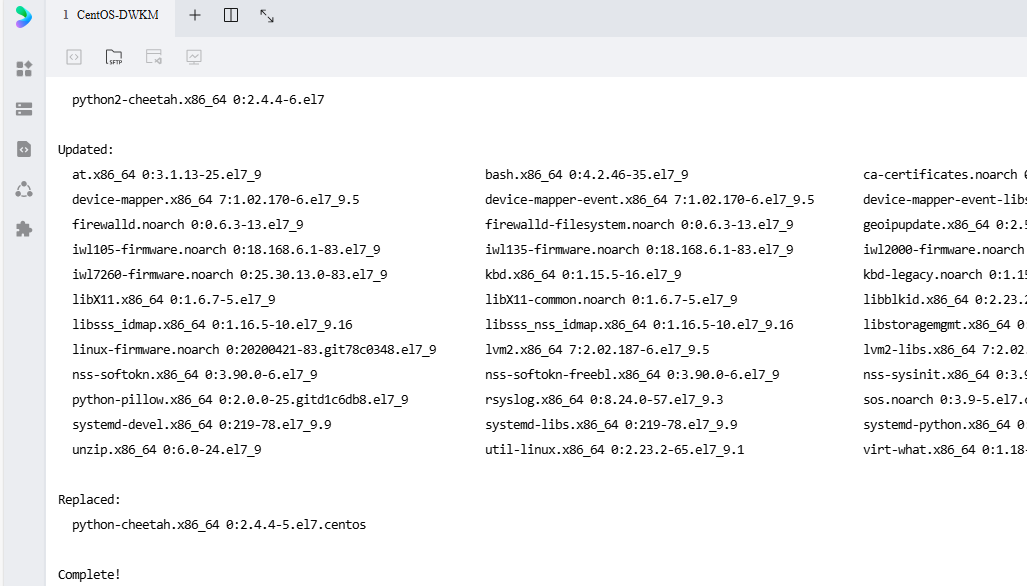

[root@node1 sqoop-1.4.7]# bin/sqoop-import-all-tables --connect jdbc:mysql://node1:3306/esdb --username root --password 123456 --as-textfile --warehouse-dir /user/root

Warning: /opt/sqoop-1.4.7/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

18/05/24 14:58:06 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/05/24 14:58:06 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/05/24 14:58:06 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/05/24 14:58:06 INFO tool.CodeGenTool: Beginning code generation

18/05/24 14:58:06 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 14:58:06 ERROR manager.SqlManager: Error reading from database: java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@1817d444 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

java.sql.SQLException: Streaming result set com.mysql.jdbc.RowDataDynamic@1817d444 is still active. No statements may be issued when any streaming result sets are open and in use on a given connection. Ensure that you have called .close() on any active streaming result sets before attempting more queries.

at com.mysql.jdbc.SQLError.createSQLException(SQLError.java:931)

at com.mysql.jdbc.MysqlIO.checkForOutstandingStreamingData(MysqlIO.java:2518)

at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:1748)

at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:1961)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2537)

at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2466)

at com.mysql.jdbc.StatementImpl.executeQuery(StatementImpl.java:1383)

at com.mysql.jdbc.ConnectionImpl.getMaxBytesPerChar(ConnectionImpl.java:2939)

at com.mysql.jdbc.Field.getMaxBytesPerCharacter(Field.java:576)

at com.mysql.jdbc.ResultSetMetaData.getPrecision(ResultSetMetaData.java:440)

at org.apache.sqoop.manager.SqlManager.getColumnInfoForRawQuery(SqlManager.java:305)

at org.apache.sqoop.manager.SqlManager.getColumnTypesForRawQuery(SqlManager.java:260)

at org.apache.sqoop.manager.SqlManager.getColumnTypes(SqlManager.java:246)

at org.apache.sqoop.manager.ConnManager.getColumnTypes(ConnManager.java:327)

at org.apache.sqoop.orm.ClassWriter.getColumnTypes(ClassWriter.java:1872)

at org.apache.sqoop.orm.ClassWriter.generate(ClassWriter.java:1671)

at org.apache.sqoop.tool.CodeGenTool.generateORM(CodeGenTool.java:106)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:501)

at org.apache.sqoop.tool.ImportAllTablesTool.run(ImportAllTablesTool.java:110)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

18/05/24 14:58:06 ERROR tool.ImportAllTablesTool: Encountered IOException running import job: java.io.IOException: No columns to generate for ClassWriter

[root@node1 sqoop-1.4.7]#Поскольку драйвер MySQL, ранее загруженный в библиотеку Sqoop, немного слабый, просто обновите его до mysql-connector-java-5.1.32-bin.jar.

[root@node1 ~]# ls /opt/sqoop-1.4.7/lib |grep mysql

mysql-connector-java-5.1.32-bin.jar

[root@node1 ~]#2. Выполните еще раз

[root@node1 sqoop-1.4.7]# bin/sqoop-import-all-tables --connect jdbc:mysql://node1:3306/esdb --username root --password 123456 --as-textfile --warehouse-dir /user/root

Warning: /opt/sqoop-1.4.7/bin/../../hbase does not exist! HBase imports will fail.

Please set $HBASE_HOME to the root of your HBase installation.

18/05/24 15:03:33 INFO sqoop.Sqoop: Running Sqoop version: 1.4.7

18/05/24 15:03:33 WARN tool.BaseSqoopTool: Setting your password on the command-line is insecure. Consider using -P instead.

18/05/24 15:03:34 INFO manager.MySQLManager: Preparing to use a MySQL streaming resultset.

18/05/24 15:03:34 INFO tool.CodeGenTool: Beginning code generation

18/05/24 15:03:34 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 15:03:34 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `files` AS t LIMIT 1

18/05/24 15:03:34 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.7.5

Note: /tmp/sqoop-root/compile/6a64974699f84d472b1e4438782a3423/files.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/05/24 15:03:39 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/6a64974699f84d472b1e4438782a3423/files.jar

18/05/24 15:03:39 WARN manager.MySQLManager: It looks like you are importing from mysql.

18/05/24 15:03:39 WARN manager.MySQLManager: This transfer can be faster! Use the --direct

18/05/24 15:03:39 WARN manager.MySQLManager: option to exercise a MySQL-specific fast path.

18/05/24 15:03:39 INFO manager.MySQLManager: Setting zero DATETIME behavior to convertToNull (mysql)

18/05/24 15:03:39 INFO mapreduce.ImportJobBase: Beginning import of files

18/05/24 15:03:39 INFO Configuration.deprecation: mapred.jar is deprecated. Instead, use mapreduce.job.jar

18/05/24 15:03:40 INFO Configuration.deprecation: mapred.map.tasks is deprecated. Instead, use mapreduce.job.maps

18/05/24 15:03:40 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

18/05/24 15:03:43 INFO db.DBInputFormat: Using read commited transaction isolation

18/05/24 15:03:43 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `files`

18/05/24 15:03:43 INFO db.IntegerSplitter: Split size: 810; Num splits: 4 from: 1 to: 3244

18/05/24 15:03:43 INFO mapreduce.JobSubmitter: number of splits:4

18/05/24 15:03:43 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526097883376_0013

18/05/24 15:03:44 INFO impl.YarnClientImpl: Submitted application application_1526097883376_0013

18/05/24 15:03:44 INFO mapreduce.Job: The url to track the job: http://bigdata03-test:8088/proxy/application_1526097883376_0013/

18/05/24 15:03:44 INFO mapreduce.Job: Running job: job_1526097883376_0013

18/05/24 15:03:51 INFO mapreduce.Job: Job job_1526097883376_0013 running in uber mode : false

18/05/24 15:03:51 INFO mapreduce.Job: map 0% reduce 0%

18/05/24 15:03:57 INFO mapreduce.Job: map 25% reduce 0%

18/05/24 15:03:58 INFO mapreduce.Job: map 75% reduce 0%

18/05/24 15:03:59 INFO mapreduce.Job: map 100% reduce 0%

18/05/24 15:03:59 INFO mapreduce.Job: Job job_1526097883376_0013 completed successfully

18/05/24 15:03:59 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=570260

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=412

HDFS: Number of bytes written=3799556

HDFS: Number of read operations=16

HDFS: Number of large read operations=0

HDFS: Number of write operations=8

Job Counters

Launched map tasks=4

Other local map tasks=4

Total time spent by all maps in occupied slots (ms)=18756

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=18756

Total vcore-milliseconds taken by all map tasks=18756

Total megabyte-milliseconds taken by all map tasks=19206144

Map-Reduce Framework

Map input records=3244

Map output records=3244

Input split bytes=412

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=279

CPU time spent (ms)=7090

Physical memory (bytes) snapshot=727486464

Virtual memory (bytes) snapshot=8496140288

Total committed heap usage (bytes)=443023360

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=3799556

18/05/24 15:03:59 INFO mapreduce.ImportJobBase: Transferred 3.6235 MB in 19.1194 seconds (194.0698 KB/sec)

18/05/24 15:03:59 INFO mapreduce.ImportJobBase: Retrieved 3244 records.

18/05/24 15:03:59 INFO tool.CodeGenTool: Beginning code generation

18/05/24 15:03:59 INFO manager.SqlManager: Executing SQL statement: SELECT t.* FROM `logs` AS t LIMIT 1

18/05/24 15:03:59 INFO orm.CompilationManager: HADOOP_MAPRED_HOME is /opt/hadoop-2.7.5

Note: /tmp/sqoop-root/compile/6a64974699f84d472b1e4438782a3423/logs.java uses or overrides a deprecated API.

Note: Recompile with -Xlint:deprecation for details.

18/05/24 15:03:59 INFO orm.CompilationManager: Writing jar file: /tmp/sqoop-root/compile/6a64974699f84d472b1e4438782a3423/logs.jar

18/05/24 15:03:59 INFO mapreduce.ImportJobBase: Beginning import of logs

18/05/24 15:03:59 INFO client.ConfiguredRMFailoverProxyProvider: Failing over to rm2

18/05/24 15:04:01 INFO db.DBInputFormat: Using read commited transaction isolation

18/05/24 15:04:01 INFO db.DataDrivenDBInputFormat: BoundingValsQuery: SELECT MIN(`id`), MAX(`id`) FROM `logs`

18/05/24 15:04:01 INFO db.IntegerSplitter: Split size: 0; Num splits: 4 from: 1 to: 1

18/05/24 15:04:01 INFO mapreduce.JobSubmitter: number of splits:1

18/05/24 15:04:01 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1526097883376_0014

18/05/24 15:04:01 INFO impl.YarnClientImpl: Submitted application application_1526097883376_0014

18/05/24 15:04:01 INFO mapreduce.Job: The url to track the job: http://bigdata03-test:8088/proxy/application_1526097883376_0014/

18/05/24 15:04:01 INFO mapreduce.Job: Running job: job_1526097883376_0014

18/05/24 15:04:10 INFO mapreduce.Job: Job job_1526097883376_0014 running in uber mode : false

18/05/24 15:04:10 INFO mapreduce.Job: map 0% reduce 0%

18/05/24 15:04:17 INFO mapreduce.Job: map 100% reduce 0%

18/05/24 15:04:17 INFO mapreduce.Job: Job job_1526097883376_0014 completed successfully

18/05/24 15:04:17 INFO mapreduce.Job: Counters: 30

File System Counters

FILE: Number of bytes read=0

FILE: Number of bytes written=142495

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=99

HDFS: Number of bytes written=47

HDFS: Number of read operations=4

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

Job Counters

Launched map tasks=1

Other local map tasks=1

Total time spent by all maps in occupied slots (ms)=4490

Total time spent by all reduces in occupied slots (ms)=0

Total time spent by all map tasks (ms)=4490

Total vcore-milliseconds taken by all map tasks=4490

Total megabyte-milliseconds taken by all map tasks=4597760

Map-Reduce Framework

Map input records=1

Map output records=1

Input split bytes=99

Spilled Records=0

Failed Shuffles=0

Merged Map outputs=0

GC time elapsed (ms)=72

CPU time spent (ms)=1560

Physical memory (bytes) snapshot=179109888

Virtual memory (bytes) snapshot=2121519104

Total committed heap usage (bytes)=108003328

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=47

18/05/24 15:04:17 INFO mapreduce.ImportJobBase: Transferred 47 bytes in 17.8489 seconds (2.6332 bytes/sec)

18/05/24 15:04:17 INFO mapreduce.ImportJobBase: Retrieved 1 records.

[root@node1 sqoop-1.4.7]# Всего было импортировано 2 таблицы.

[root@node1 ~]# hdfs dfs -ls /user/root

Found 5 items

drwxr-xr-x - root supergroup 0 2018-05-24 15:03 /user/root/files

drwxr-xr-x - root supergroup 0 2018-04-23 14:05 /user/root/input

drwxr-xr-x - root supergroup 0 2018-05-24 15:04 /user/root/logs

drwxr-xr-x - root supergroup 0 2018-05-22 13:36 /user/root/users

[root@node1 ~]# hdfs dfs -ls /user/root/files

Found 5 items

-rw-r--r-- 3 root supergroup 0 2018-05-24 15:03 /user/root/files/_SUCCESS

-rw-r--r-- 3 root supergroup 895593 2018-05-24 15:03 /user/root/files/part-m-00000

-rw-r--r-- 3 root supergroup 912033 2018-05-24 15:03 /user/root/files/part-m-00001

-rw-r--r-- 3 root supergroup 1109032 2018-05-24 15:03 /user/root/files/part-m-00002

-rw-r--r-- 3 root supergroup 882898 2018-05-24 15:03 /user/root/files/part-m-00003

[root@node1 ~]#

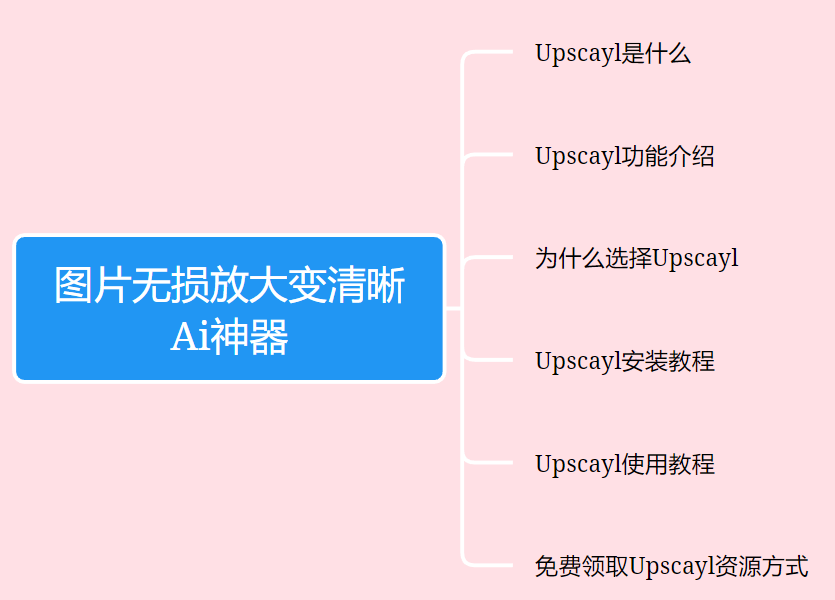

Неразрушающее увеличение изображений одним щелчком мыши, чтобы сделать их более четкими артефактами искусственного интеллекта, включая руководства по установке и использованию.

Копикодер: этот инструмент отлично работает с Cursor, Bolt и V0! Предоставьте более качественные подсказки для разработки интерфейса (создание навигационного веб-сайта с использованием искусственного интеллекта).

Новый бесплатный RooCline превосходит Cline v3.1? ! Быстрее, умнее и лучше вилка Cline! (Независимое программирование AI, порог 0)

Разработав более 10 проектов с помощью Cursor, я собрал 10 примеров и 60 подсказок.

Я потратил 72 часа на изучение курсорных агентов, и вот неоспоримые факты, которыми я должен поделиться!

Идеальная интеграция Cursor и DeepSeek API

DeepSeek V3 снижает затраты на обучение больших моделей

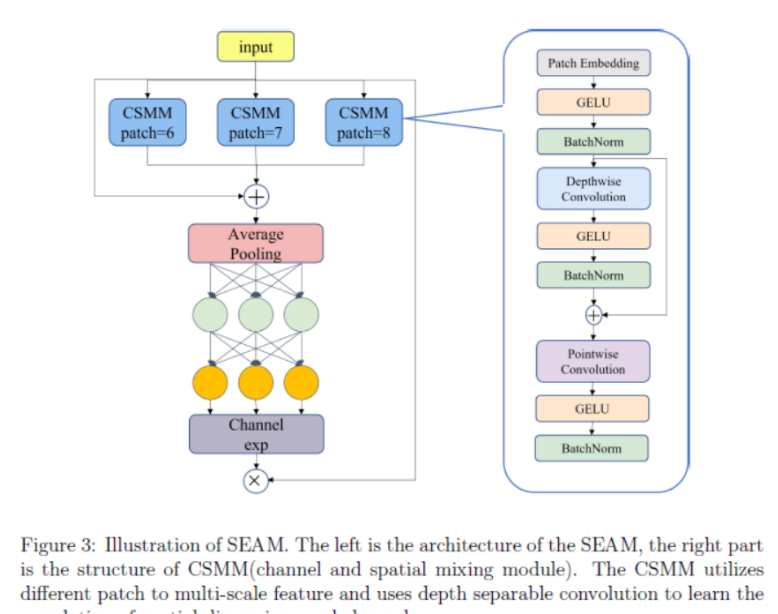

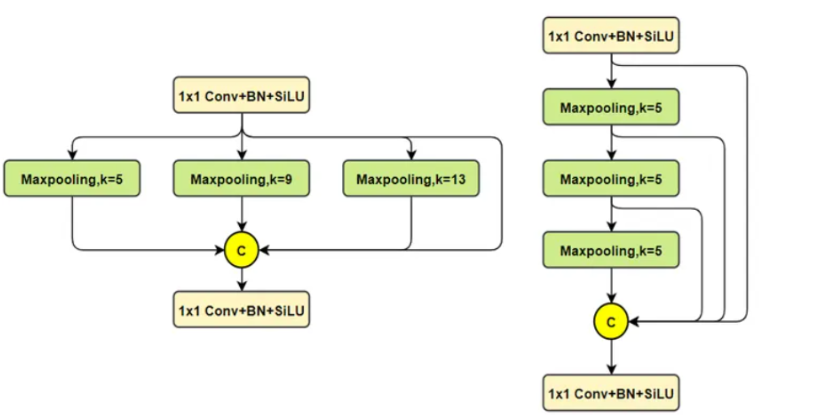

Артефакт, увеличивающий количество очков: на основе улучшения характеристик препятствия малым целям Yolov8 (SEAM, MultiSEAM).

DeepSeek V3 раскручивался уже три дня. Сегодня я попробовал самопровозглашенную модель «ChatGPT».

Open Devin — инженер-программист искусственного интеллекта с открытым исходным кодом, который меньше программирует и больше создает.

Эксклюзивное оригинальное улучшение YOLOv8: собственная разработка SPPF | SPPF сочетается с воспринимаемой большой сверткой ядра UniRepLK, а свертка с большим ядром + без расширения улучшает восприимчивое поле

Популярное и подробное объяснение DeepSeek-V3: от его появления до преимуществ и сравнения с GPT-4o.

9 основных словесных инструкций по доработке академических работ с помощью ChatGPT, эффективных и практичных, которые стоит собрать

Вызовите deepseek в vscode для реализации программирования с помощью искусственного интеллекта.

Познакомьтесь с принципами сверточных нейронных сетей (CNN) в одной статье (суперподробно)

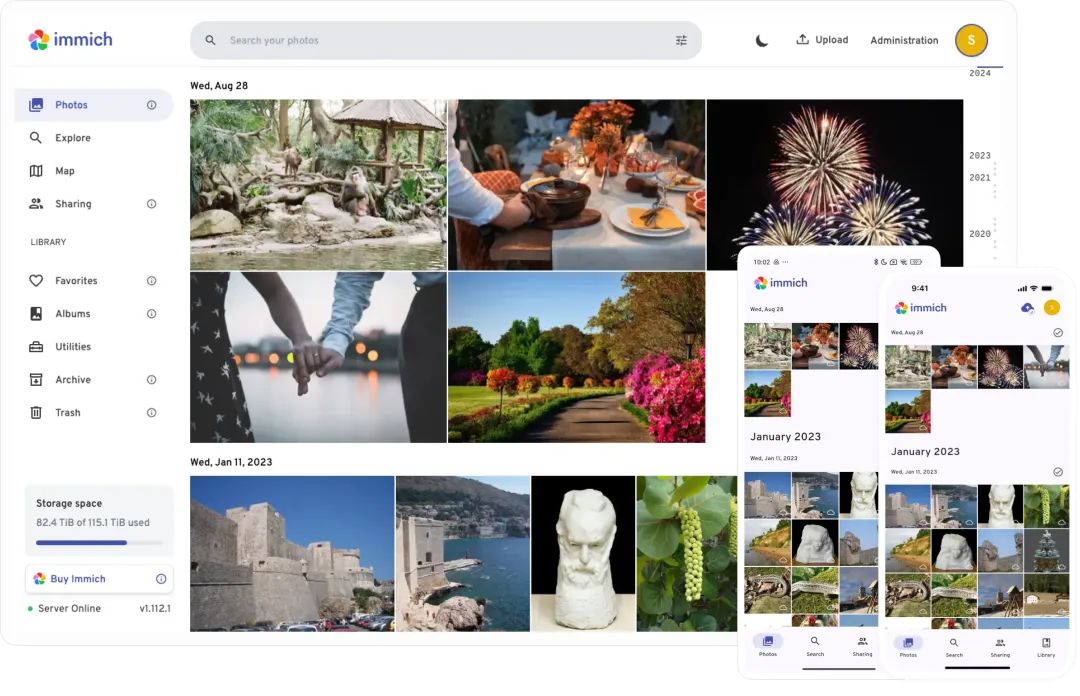

50,3 тыс. звезд! Immich: автономное решение для резервного копирования фотографий и видео, которое экономит деньги и избавляет от беспокойства.

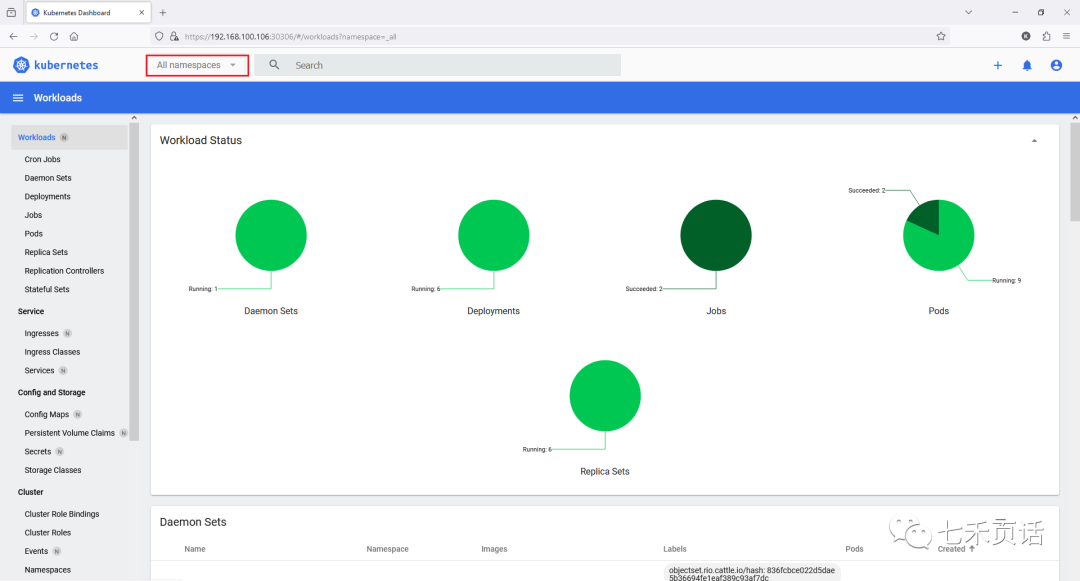

Cloud Native|Практика: установка Dashbaord для K8s, графика неплохая

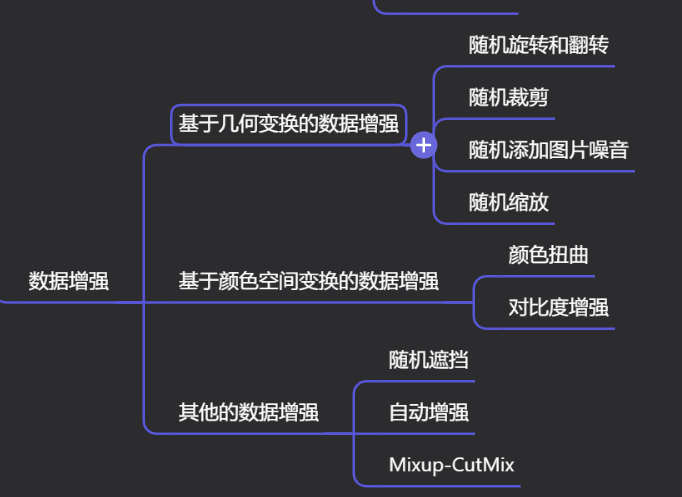

Краткий обзор статьи — использование синтетических данных при обучении больших моделей и оптимизации производительности

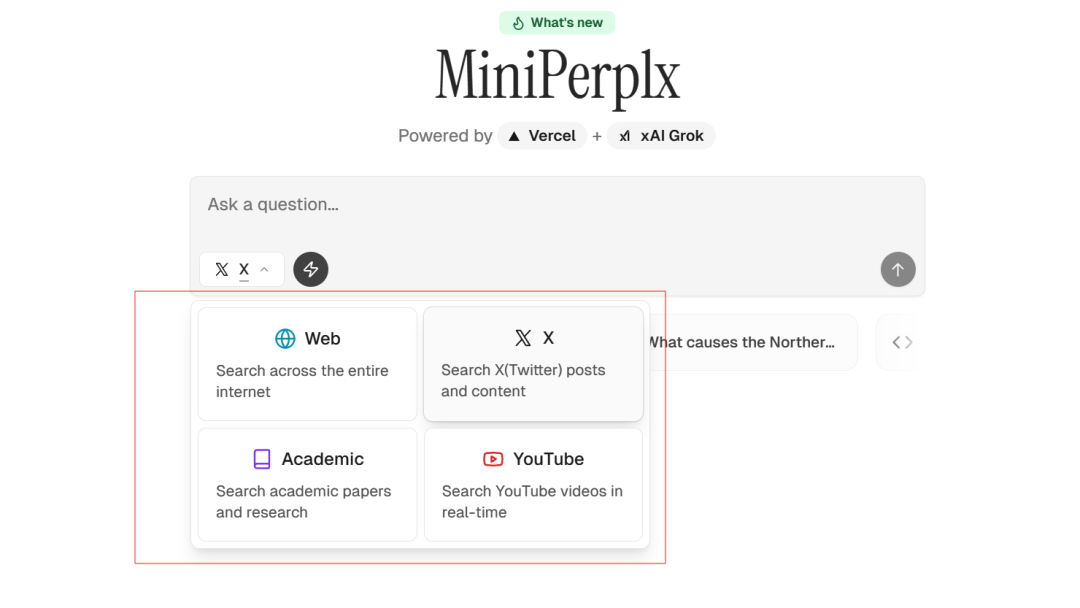

MiniPerplx: новая поисковая система искусственного интеллекта с открытым исходным кодом, спонсируемая xAI и Vercel.

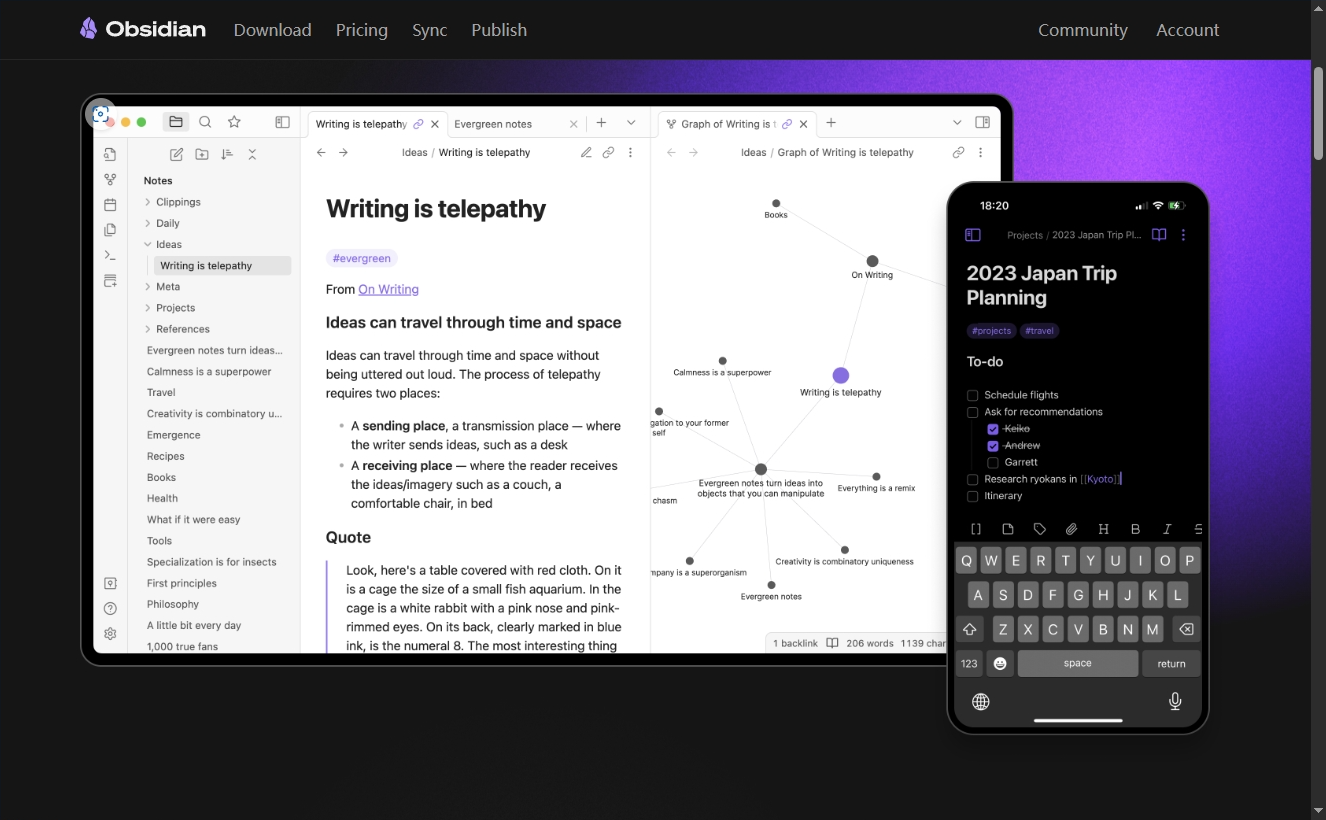

Конструкция сервиса Synology Drive сочетает проникновение в интрасеть и синхронизацию папок заметок Obsidian в облаке.

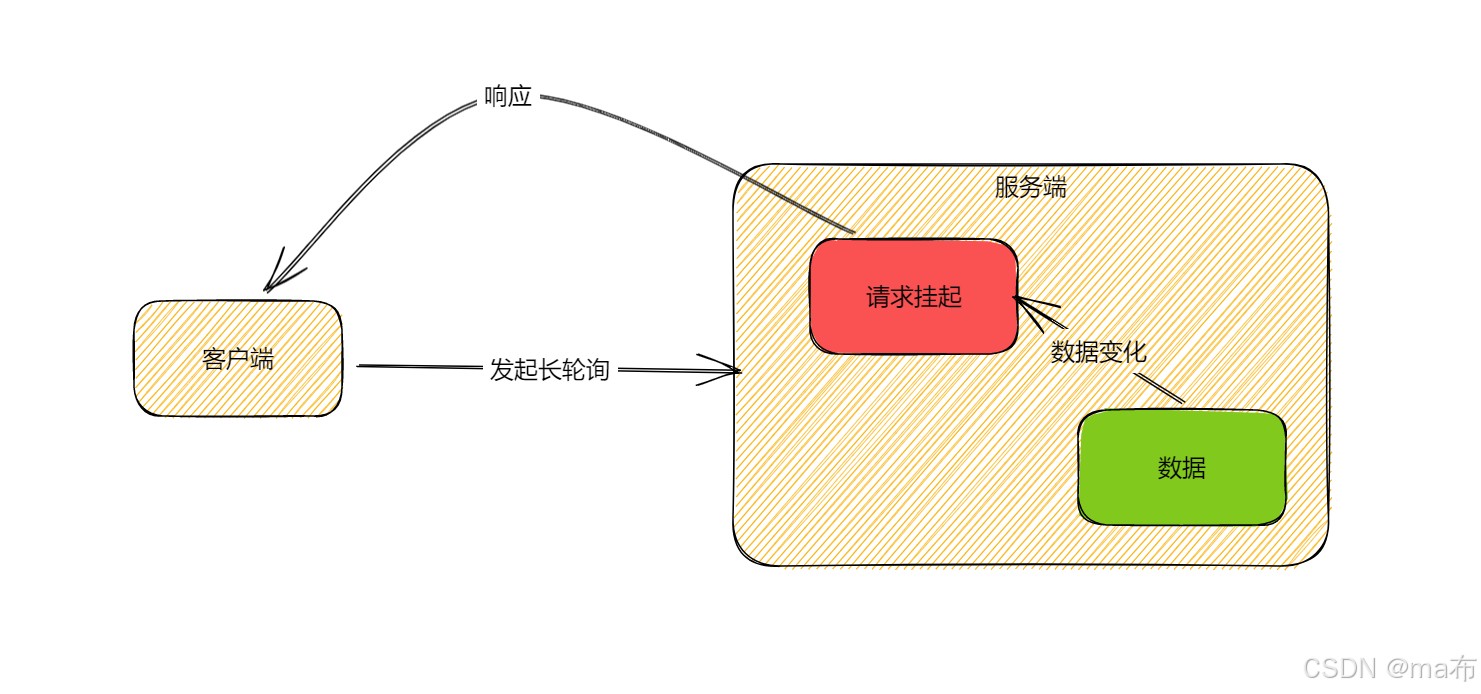

Центр конфигурации————Накос

Начинаем с нуля при разработке в облаке Copilot: начать разработку с минимальным использованием кода стало проще

[Серия Docker] Docker создает мультиплатформенные образы: практика архитектуры Arm64

Обновление новых возможностей coze | Я использовал coze для создания апплета помощника по исправлению домашних заданий по математике

Советы по развертыванию Nginx: практическое создание статических веб-сайтов на облачных серверах

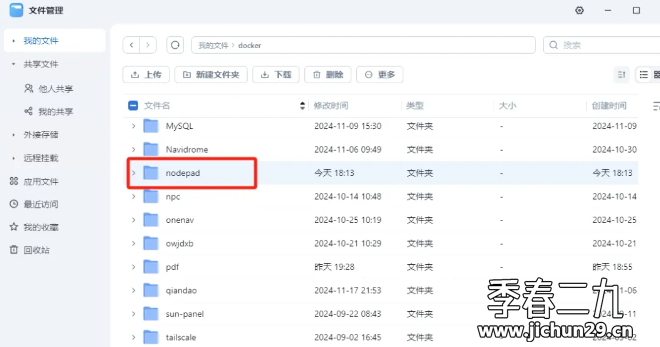

Feiniu fnos использует Docker для развертывания личного блокнота Notepad

Сверточная нейронная сеть VGG реализует классификацию изображений Cifar10 — практический опыт Pytorch

Начало работы с EdgeonePages — новым недорогим решением для хостинга веб-сайтов

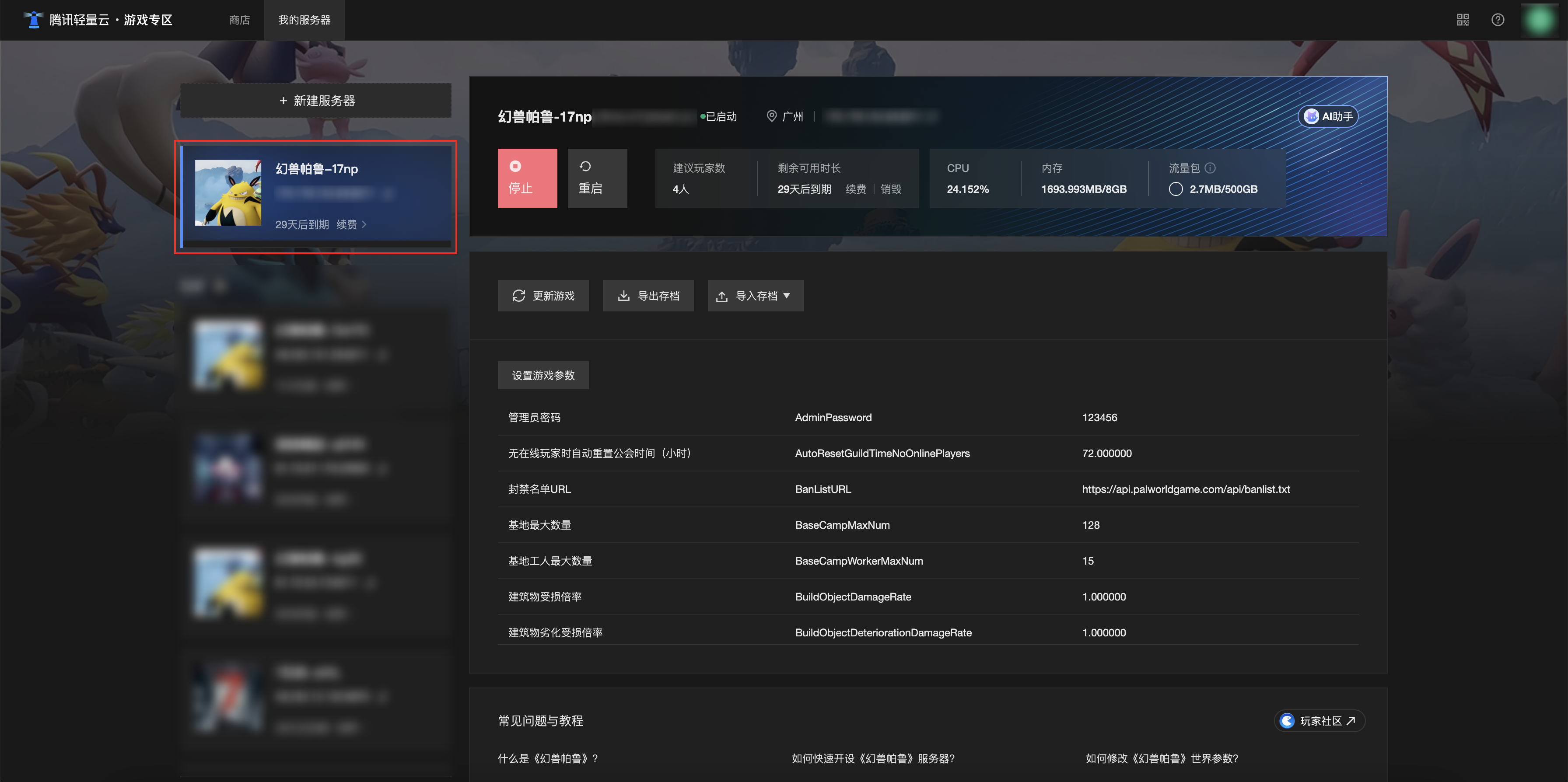

[Зона легкого облачного игрового сервера] Управление игровыми архивами