Hadoop High Availability on K8S in 8 Diagrams: A Complete Deployment Guide

1. Overview

Before Hadoop 2.0.0, a cluster had only one NameNode, which made it a single point of failure: if the NameNode machine went down, the entire cluster became unusable and could only be recovered by restarting the NameNode. Planned maintenance also meant taking the whole cluster offline, so the cluster could not be available 24/7. Hadoop 2.0 and later add a NameNode high-availability (HA) mechanism. This article focuses on deploying Hadoop HA in a k8s environment.

For a non-HA deployment on k8s, see my earlier article: Getting Started with Deploying Hadoop on K8S.


2. Deployment

This setup is adapted from the non-HA orchestration. If you are not familiar with it, read the article mentioned above first.

1) Add the JournalNode orchestration

1. StatefulSet

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: {{ include "hadoop.fullname" . }}-hdfs-jn
  annotations:
    checksum/config: {{ include (print $.Template.BasePath "/hadoop-configmap.yaml") . | sha256sum }}
  labels:
    app.kubernetes.io/name: {{ include "hadoop.name" . }}
    helm.sh/chart: {{ include "hadoop.chart" . }}
    app.kubernetes.io/instance: {{ .Release.Name }}
    app.kubernetes.io/component: hdfs-jn
spec:
  selector:
    matchLabels:
      app.kubernetes.io/name: {{ include "hadoop.name" . }}
      app.kubernetes.io/instance: {{ .Release.Name }}
      app.kubernetes.io/component: hdfs-jn
  serviceName: {{ include "hadoop.fullname" . }}-hdfs-jn
  replicas: {{ .Values.hdfs.jounralNode.replicas }}
  template:
    metadata:
      labels:
        app.kubernetes.io/name: {{ include "hadoop.name" . }}
        app.kubernetes.io/instance: {{ .Release.Name }}
        app.kubernetes.io/component: hdfs-jn
    spec:
      affinity:
        podAntiAffinity:
        {{- if eq .Values.antiAffinity "hard" }}
          requiredDuringSchedulingIgnoredDuringExecution:
          - topologyKey: "kubernetes.io/hostname"
            labelSelector:
              matchLabels:
                app.kubernetes.io/name: {{ include "hadoop.name" . }}
                app.kubernetes.io/instance: {{ .Release.Name }}
                app.kubernetes.io/component: hdfs-jn
        {{- else if eq .Values.antiAffinity "soft" }}
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 5
            podAffinityTerm:
              topologyKey: "kubernetes.io/hostname"
              labelSelector:
                matchLabels:
                  app.kubernetes.io/name: {{ include "hadoop.name" . }}
                  app.kubernetes.io/instance: {{ .Release.Name }}
                  app.kubernetes.io/component: hdfs-jn
        {{- end }}
      terminationGracePeriodSeconds: 0
      containers:
      - name: hdfs-jn
        image: "{{ .Values.image.repository }}:{{ .Values.image.tag }}"
        imagePullPolicy: {{ .Values.image.pullPolicy | quote }}
        command:
        - "/bin/bash"
        - "/opt/apache/tmp/hadoop-config/bootstrap.sh"
        - "-d"
        resources:
{{ toYaml .Values.hdfs.jounralNode.resources | indent 10 }}
        readinessProbe:
          tcpSocket:
            port: 8485
          initialDelaySeconds: 10
          timeoutSeconds: 2
        livenessProbe:
          tcpSocket:
            port: 8485
          initialDelaySeconds: 10
          timeoutSeconds: 2
        volumeMounts:
        - name: hadoop-config
          mountPath: /opt/apache/tmp/hadoop-config
        {{- range .Values.persistence.journalNode.volumes }}
        - name: {{ .name }}
          mountPath: {{ .mountPath }}
        {{- end }}
        securityContext:
          runAsUser: {{ .Values.securityContext.runAsUser }}
          privileged: {{ .Values.securityContext.privileged }}
      volumes:
      - name: hadoop-config
        configMap:
          name: {{ include "hadoop.fullname" . }}
      {{- if not .Values.persistence.journalNode.enabled }}
      # Without persistence, back each mount above with an emptyDir of the same name
      {{- range .Values.persistence.journalNode.volumes }}
      - name: {{ .name }}
        emptyDir: {}
      {{- end }}
      {{- end }}
  {{- if .Values.persistence.journalNode.enabled }}
  volumeClaimTemplates:
  {{- range .Values.persistence.journalNode.volumes }}
  - metadata:
      name: {{ .name }}
      labels:
        app.kubernetes.io/name: {{ include "hadoop.name" $ }}
        helm.sh/chart: {{ include "hadoop.chart" $ }}
        app.kubernetes.io/instance: {{ $.Release.Name }}
        app.kubernetes.io/component: hdfs-jn
    spec:
      accessModes:
      - {{ $.Values.persistence.journalNode.accessMode | quote }}
      resources:
        requests:
          storage: {{ $.Values.persistence.journalNode.size | quote }}
      {{- if $.Values.persistence.journalNode.storageClass }}
      {{- if (eq "-" $.Values.persistence.journalNode.storageClass) }}
      storageClassName: ""
      {{- else }}
      storageClassName: "{{ $.Values.persistence.journalNode.storageClass }}"
      {{- end }}
      {{- end }}
  {{- end }}
  {{- end }}
```
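QuorumJournalManager only accepts an edit once a majority of JournalNodes has written it, which is why `hdfs.jounralNode.replicas` is set to an odd number and why the anti-affinity rules above try to put each JournalNode on a different host. Per the Apache HA guide, N JournalNodes tolerate at most (N - 1) / 2 failures. The arithmetic as a small sketch (the function names are hypothetical helpers, not part of the chart):

```bash
#!/usr/bin/env bash
# Majority QJM needs before an edit counts as committed: floor(N/2) + 1.
jn_quorum() {
  echo $(( $1 / 2 + 1 ))
}

# JournalNode failures tolerated while a majority is still reachable: floor((N-1)/2).
jn_max_failures() {
  echo $(( ($1 - 1) / 2 ))
}

for n in 3 4 5; do
  echo "replicas=$n quorum=$(jn_quorum "$n") tolerated=$(jn_max_failures "$n")"
done
```

With three replicas the quorum is two and one JournalNode may fail; four replicas raise the quorum to three without tolerating any extra failure, so odd counts are the useful ones.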

2. Service

```yaml
# A headless service to create DNS records
apiVersion: v1
kind: Service
metadata:
  name: {{ include "hadoop.fullname" . }}-hdfs-jn
  labels:
    app.kubernetes.io/name: {{ include "hadoop.name" . }}
    helm.sh/chart: {{ include "hadoop.chart" . }}
    app.kubernetes.io/instance: {{ .Release.Name }}
    app.kubernetes.io/component: hdfs-jn
spec:
  ports:
  - name: jn
    port: {{ .Values.service.journalNode.ports.jn }}
    protocol: TCP
    {{- if and (eq .Values.service.journalNode.type "NodePort") .Values.service.journalNode.nodePorts.jn }}
    nodePort: {{ .Values.service.journalNode.nodePorts.jn }}
    {{- end }}
  type: {{ .Values.service.journalNode.type }}
  {{- if eq .Values.service.journalNode.type "ClusterIP" }}
  # Headless: gives every StatefulSet pod its own DNS record
  clusterIP: None
  {{- end }}
  selector:
    app.kubernetes.io/name: {{ include "hadoop.name" . }}
    app.kubernetes.io/instance: {{ .Release.Name }}
    app.kubernetes.io/component: hdfs-jn
```
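The StatefulSet and the Service share the name `<fullname>-hdfs-jn`, so the JournalNode pods get stable names (`...-hdfs-jn-0`, `-1`, ...). The NameNodes reach them through `dfs.namenode.shared.edits.dir`, whose QJM form is `qjournal://host1:port1;host2:port2;.../journalId`. The real value lives in the chart's hadoop-configmap.yaml, which is not reproduced here; the sketch below only shows how such a URI can be assembled, assuming the per-pod DNS names a headless Service provides (`<pod>.<service>.<namespace>.svc.cluster.local`), the default `cluster.local` domain, and a hypothetical journal ID `mycluster`:

```bash
#!/usr/bin/env bash
# Assemble a QJM shared-edits URI from StatefulSet pod DNS names.
# Assumptions: headless governing Service, cluster domain "cluster.local",
# hypothetical journal ID "mycluster" (the chart's real value may differ).
qjournal_uri() {
  local svc="$1" ns="$2" replicas="$3" port="${4:-8485}" journal_id="${5:-mycluster}"
  local -a hosts=()
  local i
  for (( i = 0; i < replicas; i++ )); do
    # Pod <svc>-<i> resolves as <svc>-<i>.<svc>.<ns>.svc.cluster.local
    hosts+=("${svc}-${i}.${svc}.${ns}.svc.cluster.local:${port}")
  done
  # QJM separates the JournalNode addresses with ';'
  local IFS=';'
  printf 'qjournal://%s/%s\n' "${hosts[*]}" "$journal_id"
}

# Release "hadoop-ha" of chart "hadoop" gives the fullname "hadoop-ha-hadoop"
qjournal_uri hadoop-ha-hadoop-hdfs-jn hadoop-ha 3
```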

2) Modify the configuration

1. Modify values.yaml.

```yaml
image:
  repository: myharbor.com/bigdata/hadoop
  tag: 3.3.2
  pullPolicy: IfNotPresent

# The version of the hadoop libraries being used in the image.
hadoopVersion: 3.3.2
logLevel: INFO

# Select antiAffinity as either hard or soft, default is soft
antiAffinity: "soft"

hdfs:
  nameNode:
    replicas: 2
    pdbMinAvailable: 1
    resources:
      requests:
        memory: "256Mi"
        cpu: "10m"
      limits:
        memory: "2048Mi"
        cpu: "1000m"

  dataNode:
    # Will be used as dfs.datanode.hostname
    # You still need to set up services + ingress for every DN
    # Datanodes will expect to
    externalHostname: example.com
    externalDataPortRangeStart: 9866
    externalHTTPPortRangeStart: 9864
    replicas: 3
    pdbMinAvailable: 1
    resources:
      requests:
        memory: "256Mi"
        cpu: "10m"
      limits:
        memory: "2048Mi"
        cpu: "1000m"

  webhdfs:
    enabled: true

  # Spelled "jounralNode" because the StatefulSet template reads
  # .Values.hdfs.jounralNode; rename both together if you fix the typo.
  jounralNode:
    replicas: 3
    pdbMinAvailable: 1
    resources:
      requests:
        memory: "256Mi"
        cpu: "10m"
      limits:
        memory: "2048Mi"
        cpu: "1000m"

yarn:
  resourceManager:
    pdbMinAvailable: 1
    replicas: 2
    resources:
      requests:
        memory: "256Mi"
        cpu: "10m"
      limits:
        memory: "2048Mi"
        cpu: "2000m"

  nodeManager:
    pdbMinAvailable: 1
    # The number of YARN NodeManager instances.
    replicas: 1
    # Create statefulsets in parallel (K8S 1.7+)
    parallelCreate: false
    # CPU and memory resources allocated to each node manager pod.
    # This should be tuned to fit your workload.
    resources:
      requests:
        memory: "256Mi"
        cpu: "500m"
      limits:
        memory: "2048Mi"
        cpu: "1000m"

persistence:
  nameNode:
    enabled: true
    storageClass: "hadoop-ha-nn-local-storage"
    accessMode: ReadWriteOnce
    size: 1Gi
    local:
    - name: hadoop-ha-nn-0
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/hadoop-ha/nn/data/data1"
    - name: hadoop-ha-nn-1
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/hadoop-ha/nn/data/data1"

  dataNode:
    enabled: true
    enabledStorageClass: false
    storageClass: "hadoop-ha-dn-local-storage"
    accessMode: ReadWriteOnce
    size: 1Gi
    local:
    - name: hadoop-ha-dn-0
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data1"
    - name: hadoop-ha-dn-1
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data2"
    - name: hadoop-ha-dn-2
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data3"
    - name: hadoop-ha-dn-3
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data1"
    - name: hadoop-ha-dn-4
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data2"
    - name: hadoop-ha-dn-5
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data3"
    - name: hadoop-ha-dn-6
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data1"
    - name: hadoop-ha-dn-7
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data2"
    - name: hadoop-ha-dn-8
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/hadoop-ha/dn/data/data3"
    volumes:
    - name: dfs1
      mountPath: /opt/apache/hdfs/datanode1
      hostPath: /opt/bigdata/servers/hadoop-ha/dn/data/data1
    - name: dfs2
      mountPath: /opt/apache/hdfs/datanode2
      hostPath: /opt/bigdata/servers/hadoop-ha/dn/data/data2
    - name: dfs3
      mountPath: /opt/apache/hdfs/datanode3
      hostPath: /opt/bigdata/servers/hadoop-ha/dn/data/data3

  journalNode:
    enabled: true
    storageClass: "hadoop-ha-jn-local-storage"
    accessMode: ReadWriteOnce
    size: 1Gi
    local:
    - name: hadoop-ha-jn-0
      host: "local-168-182-110"
      path: "/opt/bigdata/servers/hadoop-ha/jn/data/data1"
    - name: hadoop-ha-jn-1
      host: "local-168-182-111"
      path: "/opt/bigdata/servers/hadoop-ha/jn/data/data1"
    - name: hadoop-ha-jn-2
      host: "local-168-182-112"
      path: "/opt/bigdata/servers/hadoop-ha/jn/data/data1"
    volumes:
    - name: jn
      mountPath: /opt/apache/hdfs/journalnode

service:
  nameNode:
    type: NodePort
    ports:
      dfs: 9000
      webhdfs: 9870
    nodePorts:
      dfs: 30900
      webhdfs: 30870
  dataNode:
    type: NodePort
    ports:
      webhdfs: 9864
    nodePorts:
      webhdfs: 30864
  resourceManager:
    type: NodePort
    ports:
      web: 8088
    nodePorts:
      web: 30088
  journalNode:
    type: ClusterIP
    ports:
      jn: 8485
    nodePorts:
      jn: ""

securityContext:
  runAsUser: 9999
  privileged: true
```
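Each entry under `persistence.*.local` pairs a volume name with one host and one directory; the chart's templates in the repository (not shown here) presumably turn these into node-local PersistentVolumes, so each directory has to exist on that specific node. A hypothetical helper that, given the entries as `name host path` triples copied from values.yaml, lists what must be created on one node:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the directories that local volumes expect on one host.
# Each argument is a "name host path" triple copied from persistence.*.local.
dirs_for_host() {
  local host="$1" entry h path
  shift
  for entry in "$@"; do
    # The first field (volume name) is not needed here
    read -r _ h path <<< "$entry"
    if [[ "$h" == "$host" ]]; then
      printf '%s\n' "$path"
    fi
  done
}

# JournalNode entries from the values.yaml above
jn_local=(
  "hadoop-ha-jn-0 local-168-182-110 /opt/bigdata/servers/hadoop-ha/jn/data/data1"
  "hadoop-ha-jn-1 local-168-182-111 /opt/bigdata/servers/hadoop-ha/jn/data/data1"
  "hadoop-ha-jn-2 local-168-182-112 /opt/bigdata/servers/hadoop-ha/jn/data/data1"
)
dirs_for_host local-168-182-111 "${jn_local[@]}"
```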

2. Modify hadoop/templates/hadoop-configmap.yaml.

It needs quite a few changes, so I won't paste them here; the git repository link is at the end of the article.

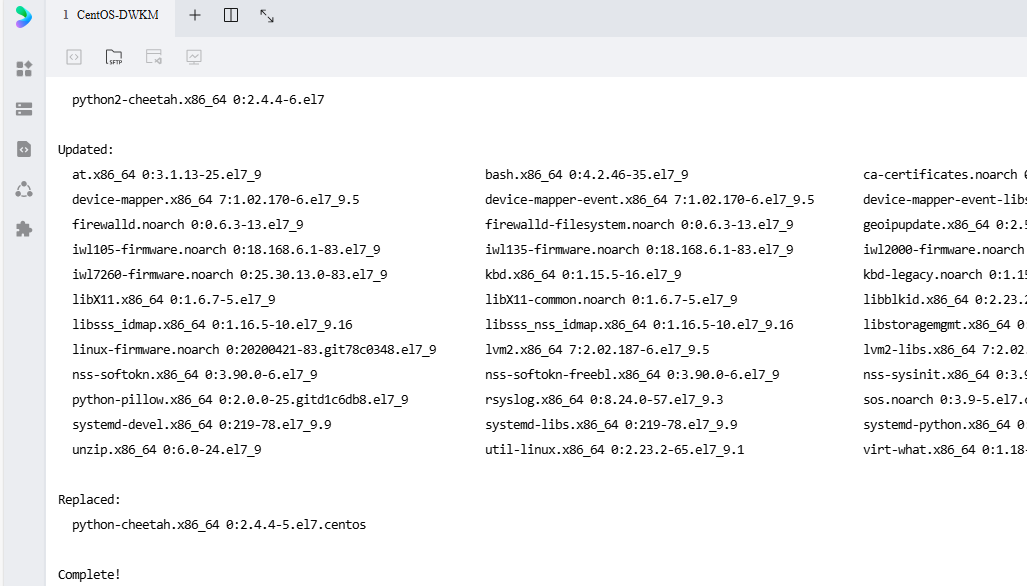
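For orientation only, the HA properties that the Apache QJM guide calls for in hdfs-site.xml look roughly like the output of the sketch below; this is not the author's configmap. The nameservice ID `mycluster`, the NameNode IDs `nn0`/`nn1` and the host names passed in are hypothetical, the ports 9000 and 9870 follow `service.nameNode.ports` in values.yaml, and automatic-failover (ZooKeeper) properties are not shown. Clients would then address HDFS as `hdfs://mycluster` via `fs.defaultFS` in core-site.xml.

```bash
#!/usr/bin/env bash
# Print one <property> element of a Hadoop *-site.xml file.
xml_property() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Sketch of an hdfs-site.xml HA block for two NameNodes with QJM shared edits.
# $1 nameservice ID, $2/$3 NameNode hosts, $4 qjournal:// URI (all assumptions).
hdfs_ha_site() {
  local ns="$1" nn0="$2" nn1="$3" edits="$4"
  echo '<configuration>'
  xml_property dfs.nameservices "$ns"
  xml_property "dfs.ha.namenodes.$ns" nn0,nn1
  xml_property "dfs.namenode.rpc-address.$ns.nn0" "$nn0:9000"
  xml_property "dfs.namenode.rpc-address.$ns.nn1" "$nn1:9000"
  xml_property "dfs.namenode.http-address.$ns.nn0" "$nn0:9870"
  xml_property "dfs.namenode.http-address.$ns.nn1" "$nn1:9870"
  xml_property dfs.namenode.shared.edits.dir "$edits"
  xml_property "dfs.client.failover.proxy.provider.$ns" \
    org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider
  # QJM allows only one writer, but a fencing method must still be configured
  xml_property dfs.ha.fencing.methods 'shell(/bin/true)'
  echo '</configuration>'
}

hdfs_ha_site mycluster nn0.example nn1.example \
  'qjournal://jn-0:8485;jn-1:8485;jn-2:8485/mycluster'
```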

3) Install

```bash
# Create the storage directories (on every node listed under persistence.*.local)
mkdir -p /opt/bigdata/servers/hadoop-ha/{nn,dn,jn}/data/data{1..3}
chmod -R 777 /opt/bigdata/servers/hadoop-ha/{nn,dn,jn}

helm install hadoop-ha ./hadoop -n hadoop-ha --create-namespace
```
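`helm install` returns once the manifests are submitted; the JournalNode, NameNode and YARN pods come up afterwards. One way to block until they all report Ready before checking anything (the function name and the 600s default timeout are arbitrary choices):

```bash
#!/usr/bin/env bash
# Block until every pod in the namespace has the Ready condition.
# kubectl exits non-zero if the timeout expires first.
wait_for_pods() {
  local ns="${1:-hadoop-ha}" timeout="${2:-600s}"
  kubectl wait --for=condition=Ready pod --all -n "$ns" --timeout="$timeout"
}

# Usage: wait_for_pods hadoop-ha 900s
```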

Verify:

```bash
kubectl get pods,svc -n hadoop-ha -owide
```
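With many pods, a filter helps spot the ones that are not up yet. A small sketch that reads `kubectl get pods --no-headers` output (columns NAME, READY, STATUS, ...) and prints every pod that is not Running or whose READY column is not `n/n`:

```bash
#!/usr/bin/env bash
# Read `kubectl get pods --no-headers` output on stdin and print the name of
# every pod that is not Running or has fewer ready containers than total.
not_ready_pods() {
  awk '{
    split($2, ready, "/")
    if (ready[1] != ready[2] || $3 != "Running") print $1
  }'
}

# Usage: kubectl get pods -n hadoop-ha --no-headers | not_ready_pods
```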

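Web UIs in the author's environment (these NodePorts differ from the `nodePorts` values in values.yaml above, so check the PORT(S) column of `kubectl get svc` for yours):

HDFS WEB-nn1: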

http://192.168.182.110:31870/dfshealth.html#tab-overview

HDFS WEB-nn2:

http://192.168.182.110:31871/dfshealth.html#tab-overview

YARN WEB-rm1:

http://192.168.182.110:31088/cluster/cluster

YARN WEB-rm2:

http://192.168.182.110:31089/cluster/cluster
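Besides the web pages, the HA role can be read from the command line. The NameNode exposes it through its `/jmx` servlet (bean `Hadoop:service=NameNode,name=NameNodeStatus`, attribute `State`) and the ResourceManager through the REST endpoint `/ws/v1/cluster/info` (field `haState`). The sed-based extractor below is a deliberately simple sketch, not a JSON parser; `jq` is the more robust choice if it is installed. The host and ports are the ones listed above.

```bash
#!/usr/bin/env bash
# Print the string value of a JSON key such as "State": "active".
# Simple sed sketch: assumes the key appears once and its value is a plain string.
json_field() {
  local key="$1"
  sed -n "s/.*\"$key\" *: *\"\([^\"]*\)\".*/\1/p"
}

nn_state() {  # $1 = host:port of a NameNode web UI
  curl -s "http://$1/jmx?qry=Hadoop:service=NameNode,name=NameNodeStatus" | json_field State
}

rm_state() {  # $1 = host:port of a ResourceManager web UI
  curl -s "http://$1/ws/v1/cluster/info" | json_field haState
}

# Usage: nn_state 192.168.182.110:31870   (typically "active" or "standby")
#        rm_state 192.168.182.110:31088   (typically "ACTIVE" or "STANDBY")
```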

4) Test

Open a shell in the first NameNode pod:

```bash
kubectl exec -it hadoop-ha-hadoop-hdfs-nn-0 -n hadoop-ha -- bash
```
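Inside that pod, a quick smoke test can check both HA pairs and a write/read round trip through HDFS. It uses the standard Hadoop 3 CLI (`hdfs haadmin -getAllServiceState`, `yarn rmadmin -getAllServiceState`, `hdfs dfs`); the test directory `/tmp/ha-smoke` and the function name are arbitrary choices.

```bash
#!/usr/bin/env bash
# Quick HA smoke test, meant to run inside a NameNode pod.
smoke_test() {
  local dir="${1:-/tmp/ha-smoke}"
  # Expect one NameNode reported as active and one as standby
  hdfs haadmin -getAllServiceState
  # The same check for the two ResourceManagers
  yarn rmadmin -getAllServiceState
  # Write a small file from stdin ("-"), then read it back
  hdfs dfs -mkdir -p "$dir" &&
    echo "hello hadoop ha" | hdfs dfs -put -f - "$dir/hello.txt" &&
    hdfs dfs -cat "$dir/hello.txt"
}

# Usage: smoke_test /tmp/ha-smoke
```

If you then delete the active NameNode pod, re-running the `haadmin` check should eventually show the other NameNode as active, provided the chart's configuration enables automatic failover.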

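5) Uninstall

```bash
helm uninstall hadoop-ha -n hadoop-ha

# Force-delete any pods that are still terminating
kubectl delete pod -n hadoop-ha --all --force --grace-period=0

# Clear finalizers if the namespace hangs in Terminating, then delete it
kubectl patch ns hadoop-ha -p '{"metadata":{"finalizers":null}}'
kubectl delete ns hadoop-ha --force

# Wipe the local data directories (on every node that hosts them)
rm -fr /opt/bigdata/servers/hadoop-ha/{nn,dn,jn}/data/data{1..3}/*
```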

Git repository:

https://gitee.com/hadoop-bigdata/hadoop-ha-on-k8s

That covers deploying Hadoop HA on k8s. If you have questions, leave me a message. Some parts may not be perfect yet; I will keep improving them and adding other services. More articles on [Big Data + Cloud Native] are on the way, so stay tuned.

- END -

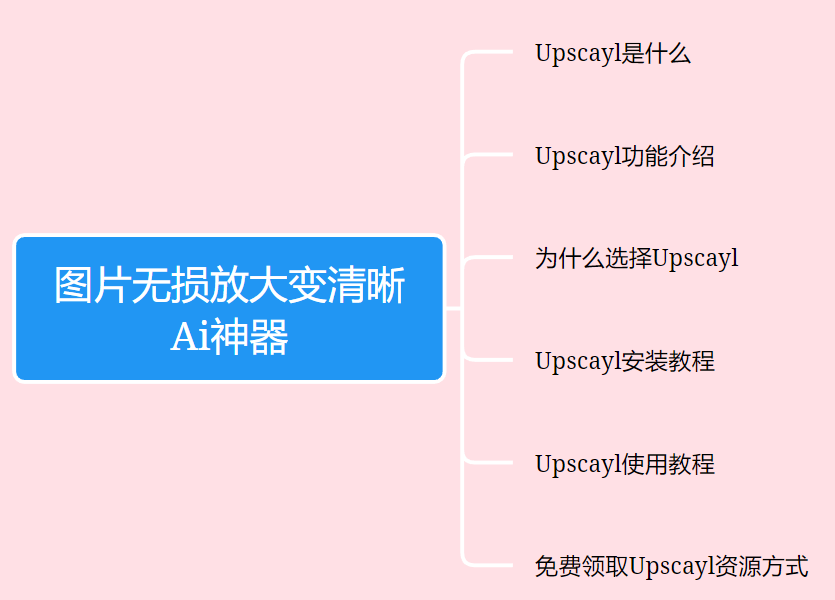

Неразрушающее увеличение изображений одним щелчком мыши, чтобы сделать их более четкими артефактами искусственного интеллекта, включая руководства по установке и использованию.

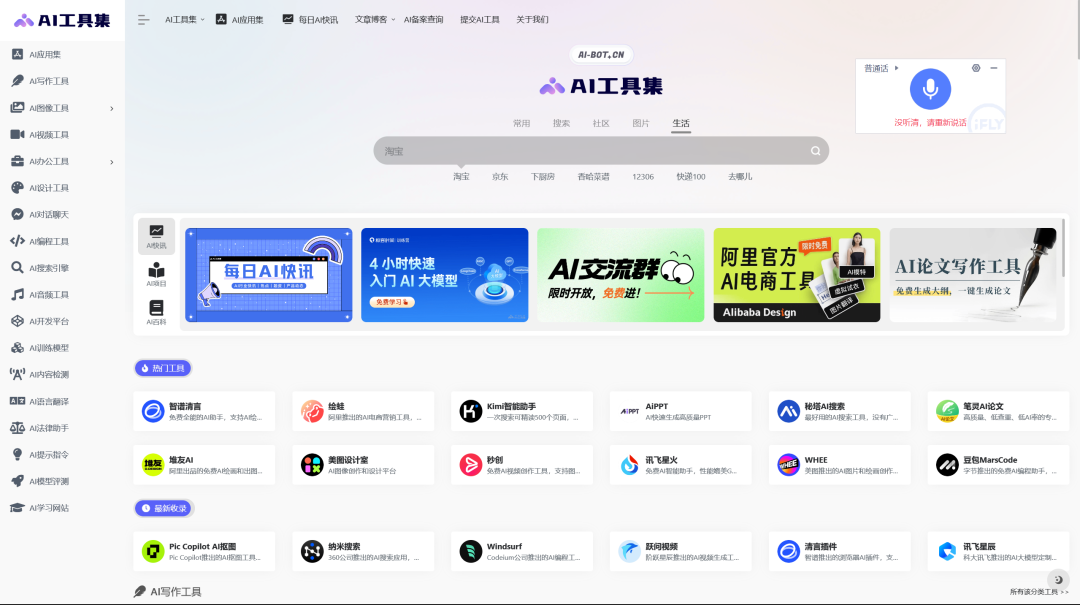

Копикодер: этот инструмент отлично работает с Cursor, Bolt и V0! Предоставьте более качественные подсказки для разработки интерфейса (создание навигационного веб-сайта с использованием искусственного интеллекта).

Новый бесплатный RooCline превосходит Cline v3.1? ! Быстрее, умнее и лучше вилка Cline! (Независимое программирование AI, порог 0)

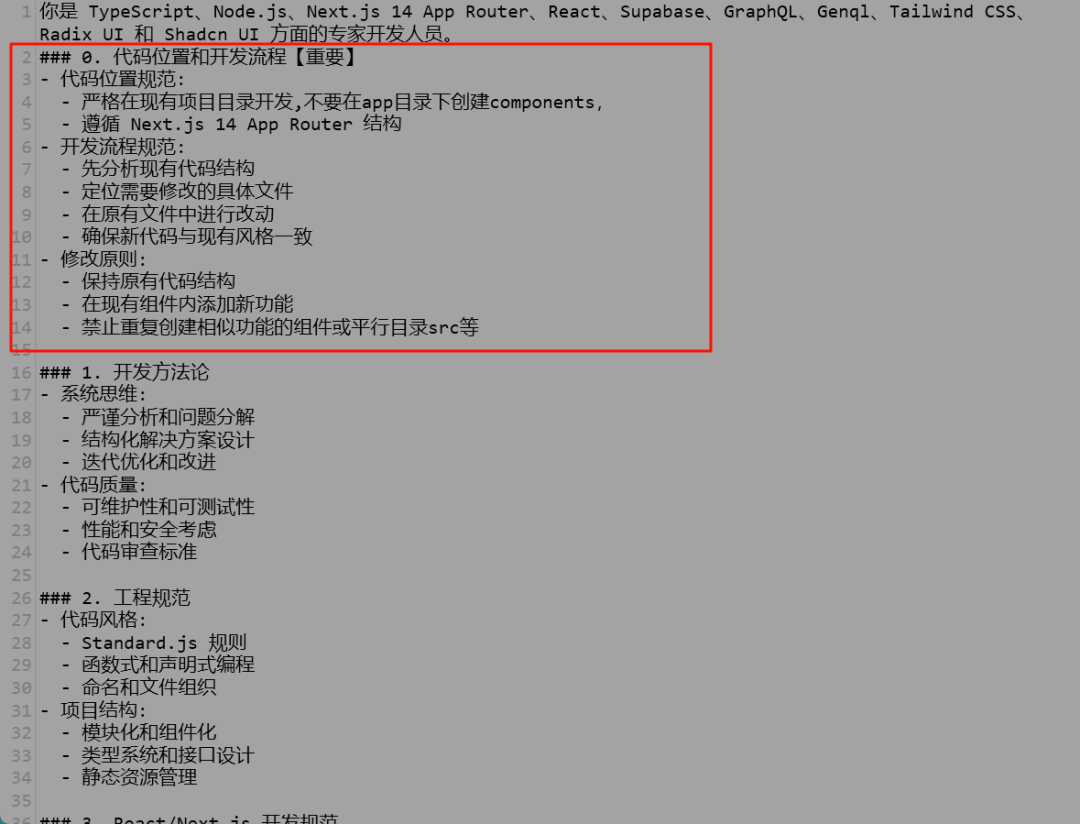

Разработав более 10 проектов с помощью Cursor, я собрал 10 примеров и 60 подсказок.

Я потратил 72 часа на изучение курсорных агентов, и вот неоспоримые факты, которыми я должен поделиться!

Идеальная интеграция Cursor и DeepSeek API

DeepSeek V3 снижает затраты на обучение больших моделей

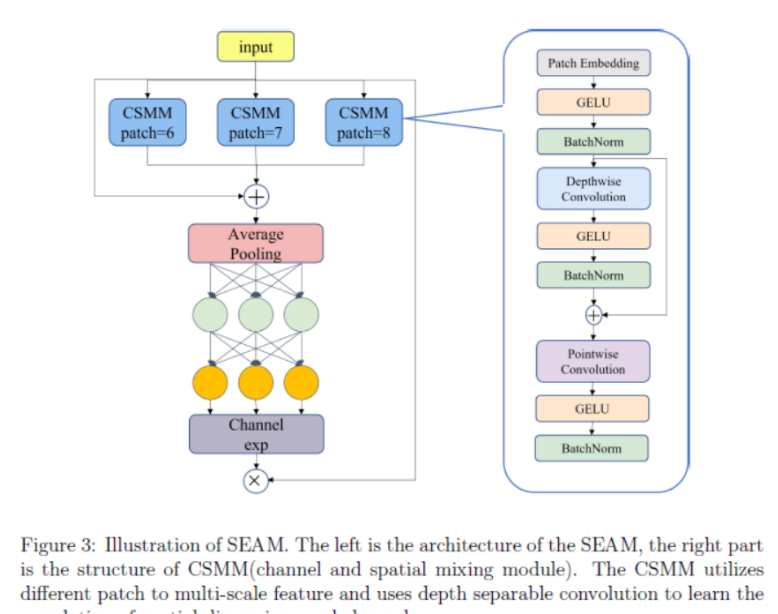

Артефакт, увеличивающий количество очков: на основе улучшения характеристик препятствия малым целям Yolov8 (SEAM, MultiSEAM).

DeepSeek V3 раскручивался уже три дня. Сегодня я попробовал самопровозглашенную модель «ChatGPT».

Open Devin — инженер-программист искусственного интеллекта с открытым исходным кодом, который меньше программирует и больше создает.

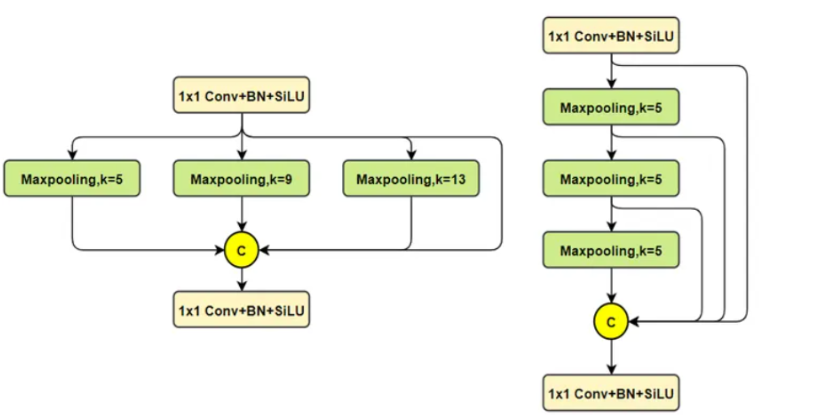

Эксклюзивное оригинальное улучшение YOLOv8: собственная разработка SPPF | SPPF сочетается с воспринимаемой большой сверткой ядра UniRepLK, а свертка с большим ядром + без расширения улучшает восприимчивое поле

Популярное и подробное объяснение DeepSeek-V3: от его появления до преимуществ и сравнения с GPT-4o.

9 основных словесных инструкций по доработке академических работ с помощью ChatGPT, эффективных и практичных, которые стоит собрать

Вызовите deepseek в vscode для реализации программирования с помощью искусственного интеллекта.

Познакомьтесь с принципами сверточных нейронных сетей (CNN) в одной статье (суперподробно)

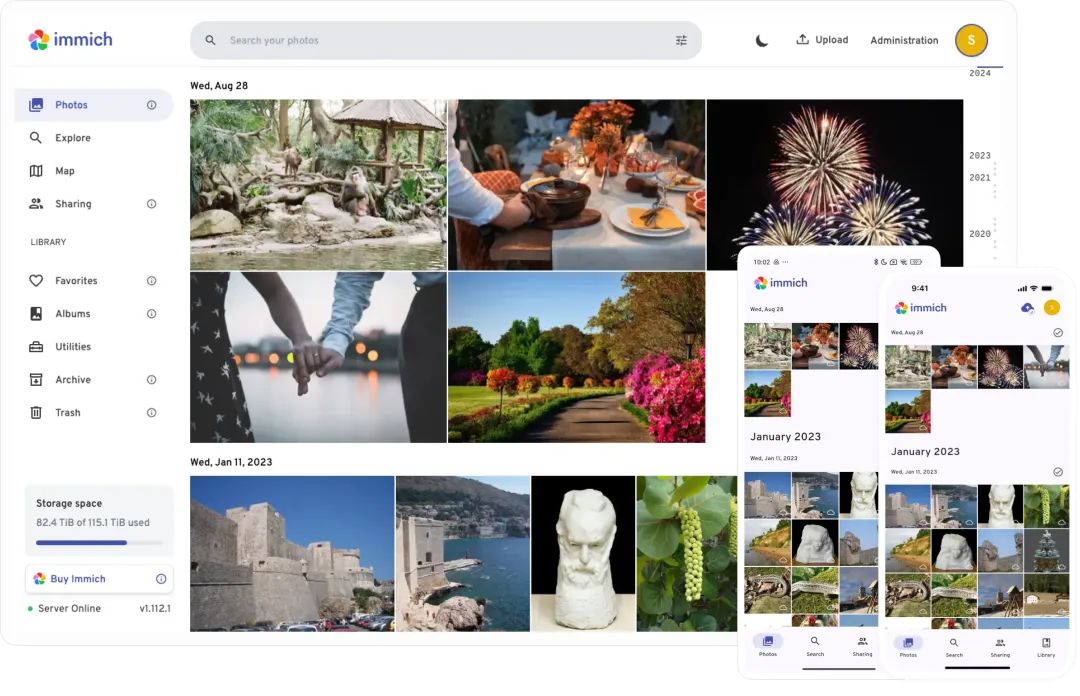

50,3 тыс. звезд! Immich: автономное решение для резервного копирования фотографий и видео, которое экономит деньги и избавляет от беспокойства.

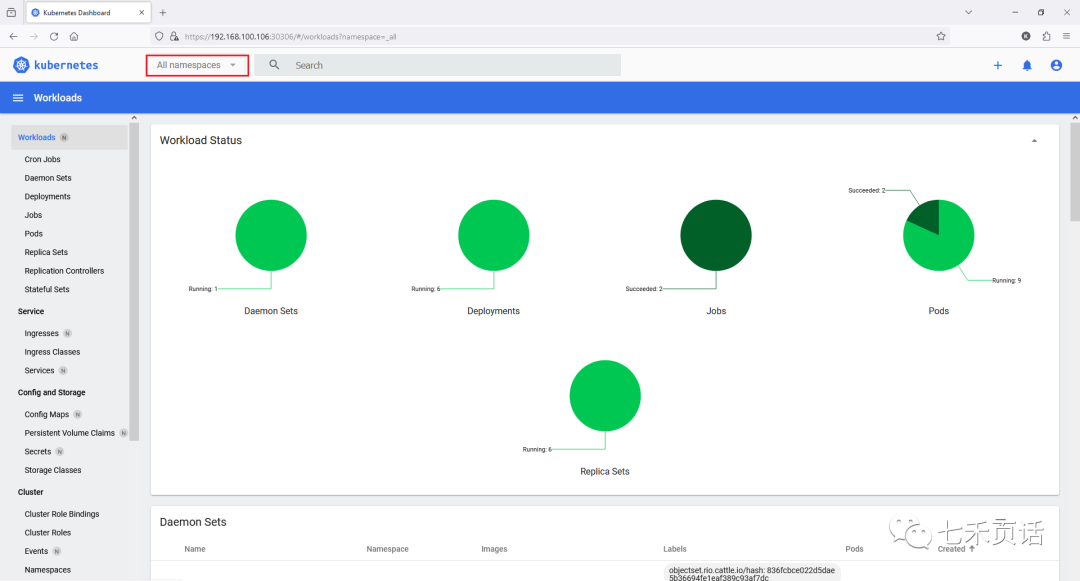

Cloud Native|Практика: установка Dashbaord для K8s, графика неплохая

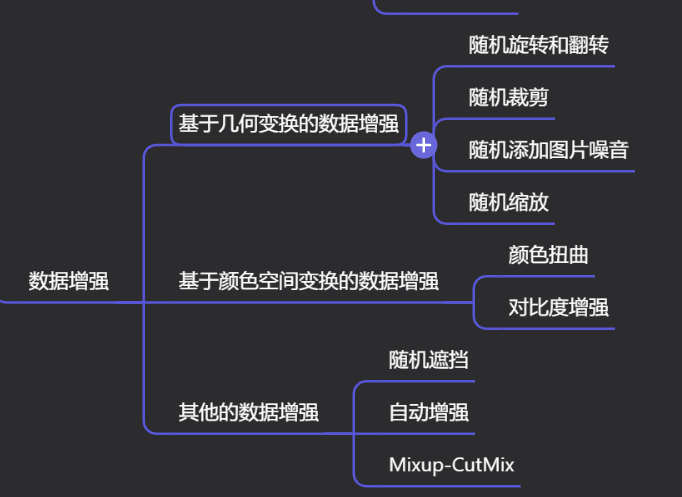

Краткий обзор статьи — использование синтетических данных при обучении больших моделей и оптимизации производительности

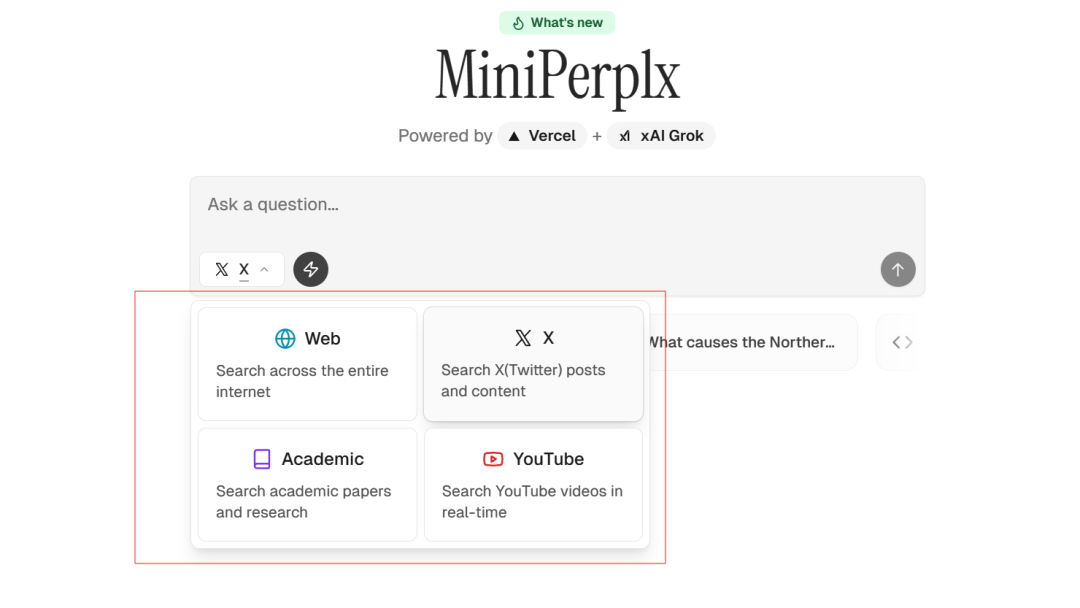

MiniPerplx: новая поисковая система искусственного интеллекта с открытым исходным кодом, спонсируемая xAI и Vercel.

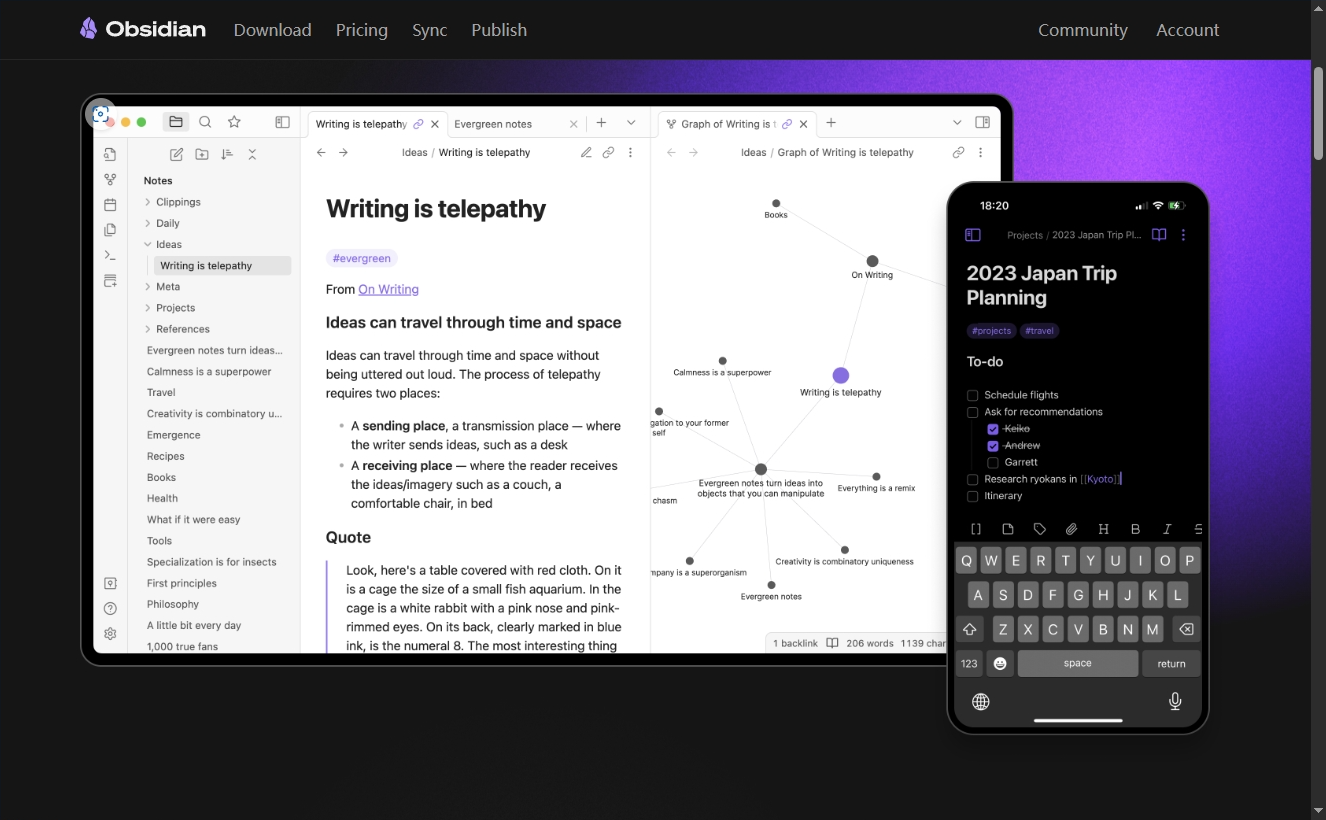

Конструкция сервиса Synology Drive сочетает проникновение в интрасеть и синхронизацию папок заметок Obsidian в облаке.

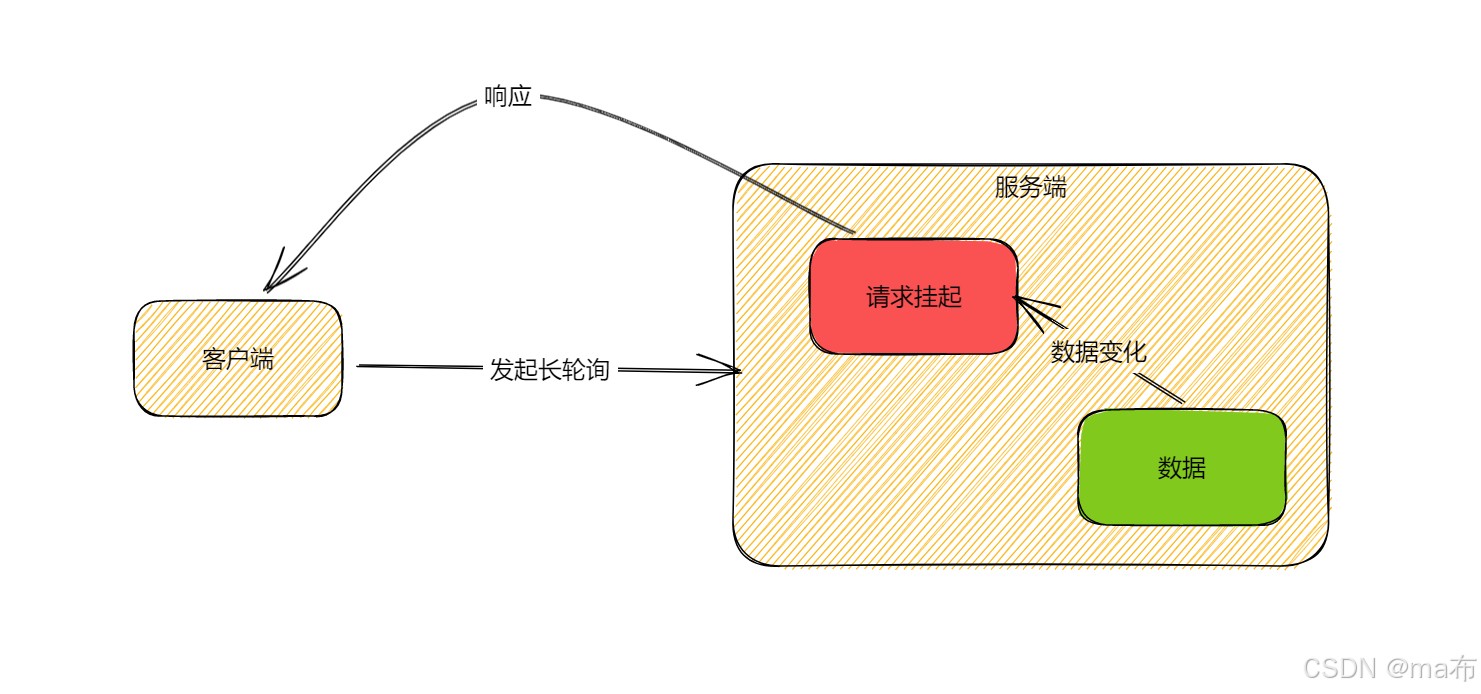

Центр конфигурации————Накос

Начинаем с нуля при разработке в облаке Copilot: начать разработку с минимальным использованием кода стало проще

[Серия Docker] Docker создает мультиплатформенные образы: практика архитектуры Arm64

Обновление новых возможностей coze | Я использовал coze для создания апплета помощника по исправлению домашних заданий по математике

Советы по развертыванию Nginx: практическое создание статических веб-сайтов на облачных серверах

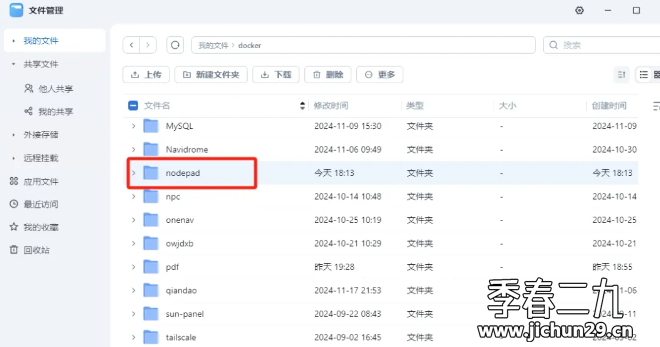

Feiniu fnos использует Docker для развертывания личного блокнота Notepad

Сверточная нейронная сеть VGG реализует классификацию изображений Cifar10 — практический опыт Pytorch

Начало работы с EdgeonePages — новым недорогим решением для хостинга веб-сайтов

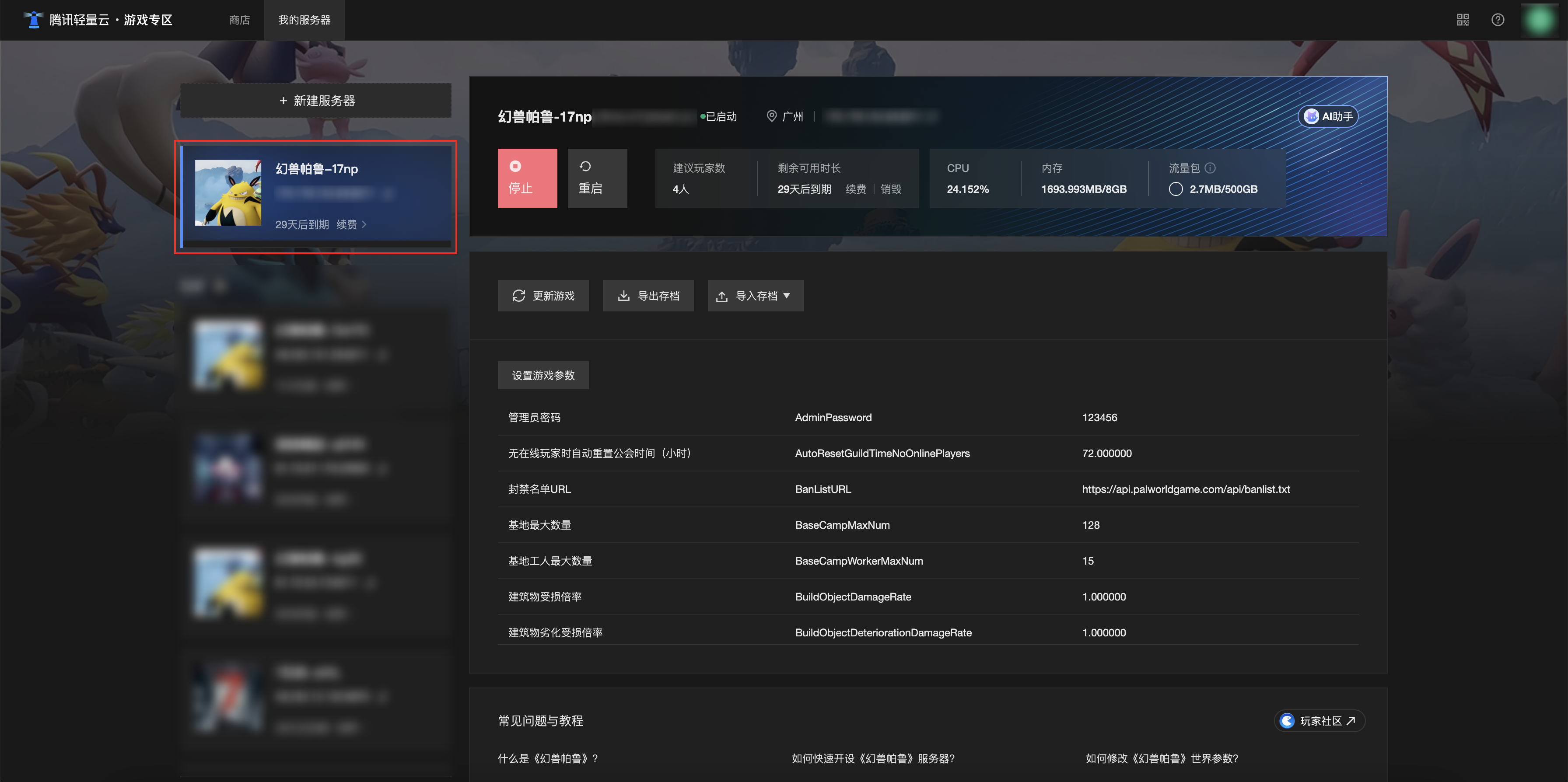

[Зона легкого облачного игрового сервера] Управление игровыми архивами